Thoughts

When Health AI Makes a Mistake, Who's Liable?

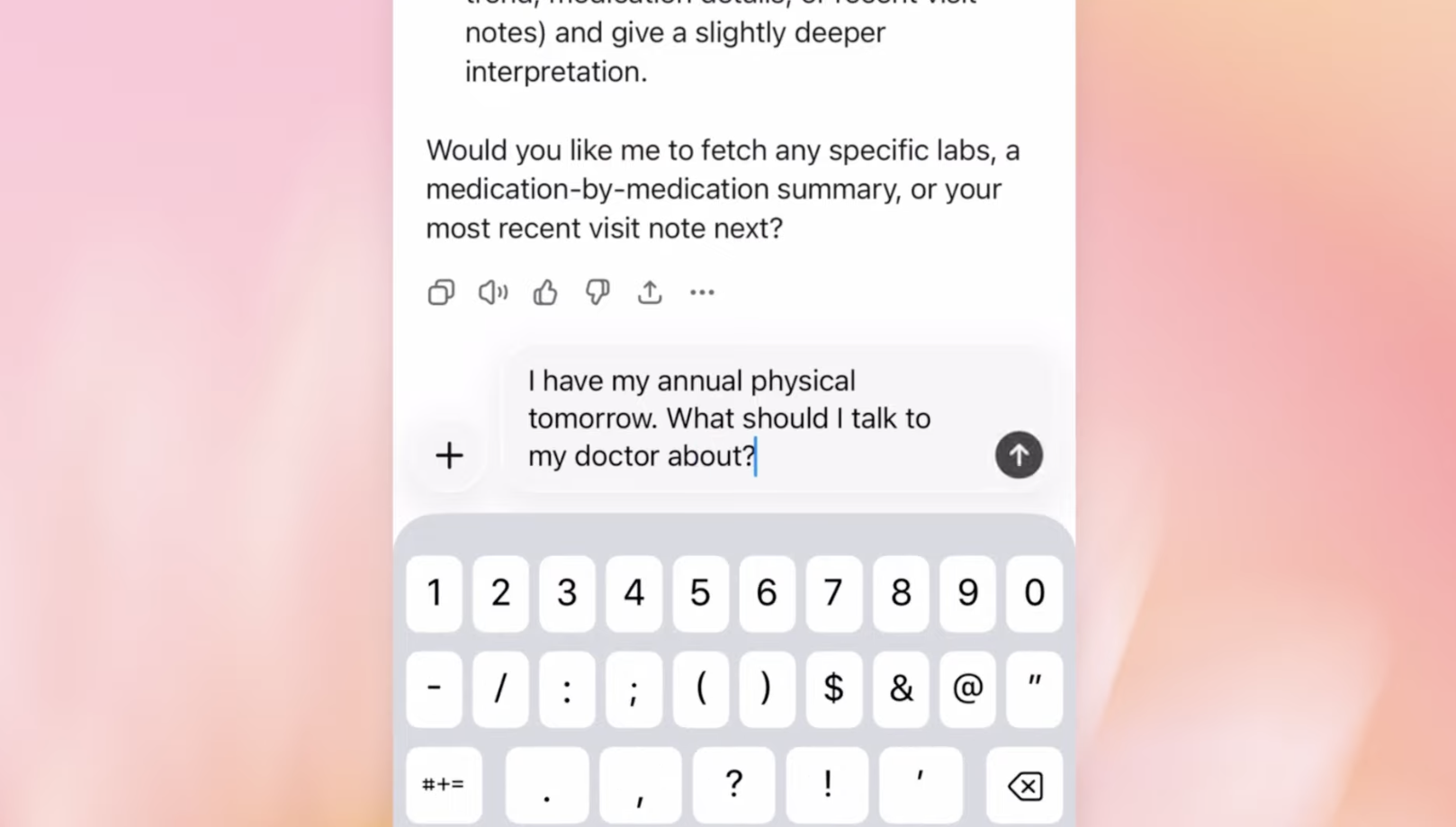

In the first week of January 2026, Utah and OpenAI each drew a different line around health AI and liability. Utah’s Doctronic pilot treats AI like a clinician, with malpractice coverage and preserved remedies. OpenAI adds medical-record syncing under unchanged disclaimers and a $100 cap.

From Exam Room to Living Room: The New Health System, Part 1

For the last 50 years, the engine of technology innovation has been a consumer engine. Consumers have steadily accumulated new health capabilities (including diagnosis, treatment, and monitoring) much faster than healthcare organizations. This has driven a decades-long, large-scale migration of health-related activity from the healthcare system to the consumer tech system. But in my experience speaking with hundreds of healthcare CEOs and board members, this migration remains largely invisible to healthcare leadership.

Digital Coaches, Part III: FDA + Utah Accelerating the Consumer Health Shift

The FDA just updated its General Wellness guidance, allowing consumer devices to measure clinical parameters for coaching—no clearance required. The same week, Utah let AI renew prescriptions with no doctor. Both are doing the same thing: moving healthcare tasks out of traditional systems and into consumer channels.

AI in Coverage Decisions: We Need Guardrails, Not Prohibition

Lawmakers are moving to ban AI-only insurance denials, requiring human sign-off for every case. It sounds compassionate, but it locks us into the same slow, opaque, costly system. The smarter move is AI with guardrails — transparency, audits, and contestable rationales — for faster, clearer, more accountable decisions.

A Closer Look at FDA's AI Medical Device Approvals (2022)

FDA approvals of AI-enabled medical devices are accelerating—but not in the way you might expect. While new startups are entering the space, the real winners remain legacy giants like GE and Siemens. An analysis of the latest FDA data reveals a classic case of sustaining innovation, not disruption, as established players integrate AI to reinforce their dominance.