AI in Coverage Decisions: We Need Guardrails, Not Prohibition

States are moving quickly to ban AI, and the press is cheering them on

After the U.S. Senate removed a proposed national moratorium that would have blocked states from regulating AI, 29 legislatures introduced 130+ health-AI bills, many targeting claim denials and prior authorization.

Arizona state legislature

More specifically, legislators are trying to prevent insurers from letting AI deny claims without the review of a physician. A good example is Arizona's H2175, which states that AI “may not be used to deny a claim or a prior authorization” and that “a health care provider shall individually review each claim” if medical judgment is involved.

This process has been remarkably bipartisan: Arizona's bill passed unanimously in both houses, with roughly equal numbers of Democrats and Republicans supporting.

Press coverage, too, has been consistently supportive. As an example, a recent NBC News article on Arizona's bill provides supportive statements from the Arizona Medical Association, the sponsoring lawmaker, the American Medical Association, and a Harvard Law School professor. From the Arizona Medical Association:

“Patients deserve healthcare delivered by humans with compassionate medical expertise, not pattern-based computer algorithms designed by insurance companies.”

Across NBC, PBS, Fox, and local outlets, coverage was uniformly supportive — with not a single dissenting view. The unanimity is striking — perhaps the first time PBS and Fox News have found themselves on the same side of a health policy debate.

I get this overwhelming support, I do. On the surface, a universal human sign-off seems compassionate and smart. But the effort is misguided, and it risks prolonging the very problems that make the existing, non-AI systems so frustrating for patients, doctors, and insurers.

I understand why these laws exist

Before I argue against these bills, let me be clear: the concerns driving them are legitimate. Several current lawsuits suggest that insurers have deployed AI systems badly, and that patients have been harmed.

The most notorious example came to light in 2023, when a lawsuit revealed that UnitedHealthcare was using an AI algorithm called nH Predict to determine how long elderly patients should remain in post-acute care facilities. According to the complaint, the system was known to have a staggering 90% error rate, yet the insurer continued denying claims based on its output, knowing that only a tiny fraction of patients would contest the denial. Patients were allegedly forced to leave rehabilitation facilities before they were ready, or their families faced crushing out-of-pocket costs to continue care. And in February of this year, a federal judge allowed the lawsuit to proceed.

Similarly, Cigna is currently being sued for using an automated system called PXDX that allowed doctors to deny claims in bulk, reportedly reviewing cases at a rate of one every two seconds. While Cigna claimed doctors were making the final decisions, that speed strongly suggests the "review" was perfunctory at best.

These aren't hypothetical risks. If the allegations hold up in court, they represent real failures that may have caused real harm. And they explain why lawmakers and the public are skeptical of letting insurers use AI without mandatory human oversight.

But prohibition just brings us back to square one

Here's where I part ways with the legislative response: these alleged failures appear to have been the result of bad faith, poor implementation, and inadequate oversight, not proof that AI is inherently unfit for coverage decisions. In both cases above, the problem wasn't that AI made the determination; it was that faulty systems were deployed without transparency or meaningful accountability.

Banning AI-only denials doesn't fix any of that. It just forces insurers to add a human signature to the process — a signature that, as the Cigna example shows, can be just as perfunctory and meaningless as an automated one. And we'll still have the same lack of transparency about how decisions are made, the same difficulty appealing denials, the same abysmal speeds, and the same high cost.

In other words, banning AI-only denials condemns us to the existing system that everyone already hates.

If we truly want to improve the system, the question isn’t whether to use AI, but how

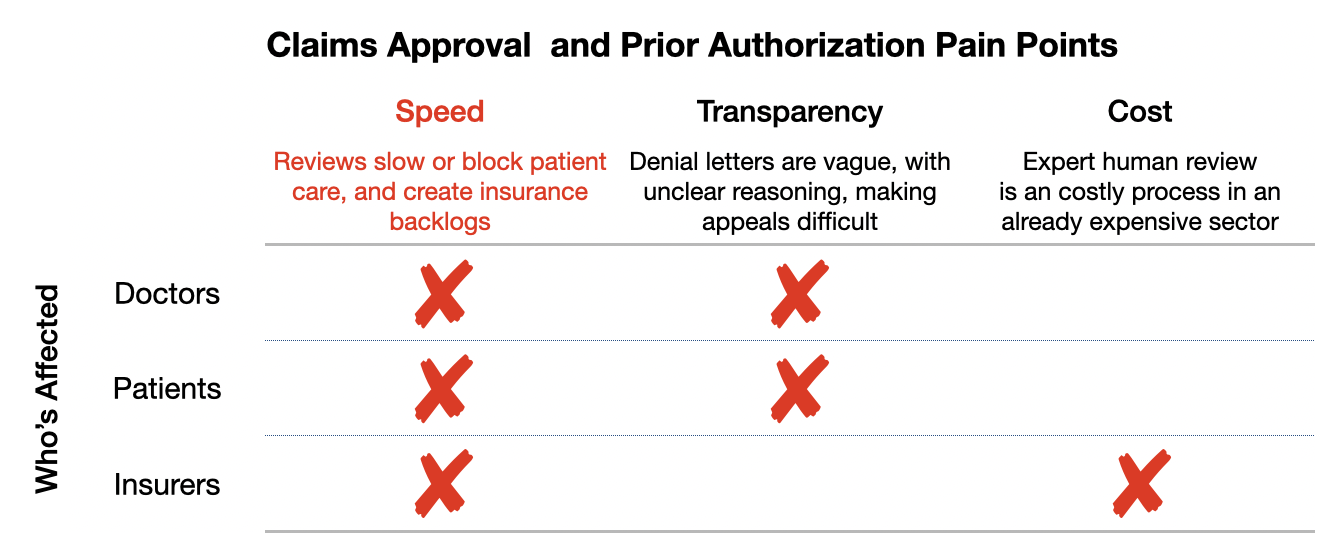

Frustrations with the existing human-mediated claims systems come down to three things:

Speed — The universal frustration. Claims decisions take too long, slowing down care, disrupting doctors’ workflow, and delaying payment.

Transparency — Denial letters are vague, with unclear reasons for denial, making it impossible to know if the decision is medically sound.

Cost — The insurer’s primary concern. Manual processes and human review are expensive, and those costs ripple through the system to premiums and taxpayers.

The one thing doctors, patients, and insurers can all agree on: the current systems are too slow

What the legislators (and the media) are missing is that we can use well-designed AI to address all three issues.

Reviewing medical necessity determinations is a narrow, highly structured task: reading documentation and checking it against coverage rules and practice standards. Modern LLMs already handle similar structured tasks in finance and logistics, and can do the same for claims.

An AI system can also promote transparency. Automated systems are better than people at producing plain-language, structured, reproducible rationales, and they can do it 24/7/365. They can log which policy clause applied, what evidence was missing, and how the decision could be overturned. That's far more detail than a cursory physician denial letter. The solution isn't to remove AI; it's to specify the outputs we want and require AI systems to provide them.

Further, we can engineer quality into these systems much more easily and cheaply than with people. We can build in audits, sampling, and benchmarks that ensure verifiable fairness and reproducibility. We can also create HIPAA-compliant ways to allow third-party audits, including human audits but more likely AI reviewing AI.

And this last point isn't theoretical: tools like Claimable, Fight Health Insurance, and the completely free Counterforce Health already help patients generate appeal letters and contest denials in minutes. The Guardian has described this as an emerging "AI vs AI" arms race, where patients' tools counter insurers' tools. The key is to remove barriers to using these oversight AIs by standardizing access to decision packets and rationales, and to let these developers battle it out to improve their products.

Free AI-generated claims denial appeals from Counterforce Health
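To make "specify the outputs we want" a little more concrete, here is a minimal sketch of what a structured, loggable denial rationale and a reproducible audit sample might look like. Everything in it is an illustrative assumption: the `DenialRationale` record, its field names, and the `sample_for_audit` helper are hypothetical and do not describe any insurer's system or any existing standard.

```python
import json
import random
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class DenialRationale:
    """Hypothetical structured record for a single coverage decision."""
    claim_id: str
    decision: str                      # "approved" or "denied"
    policy_clause: str                 # which coverage rule was applied
    missing_evidence: List[str] = field(default_factory=list)
    how_to_overturn: str = ""          # plain-language appeal guidance

    def to_json(self) -> str:
        # Sorted keys keep the logged record byte-for-byte reproducible.
        return json.dumps(asdict(self), sort_keys=True)


def sample_for_audit(records, rate, seed=0):
    """Pick a reproducible random subset of denials for outside review."""
    denials = [r for r in records if r.decision == "denied"]
    if not denials:
        return []
    k = max(1, round(len(denials) * rate))
    # A fixed seed lets a regulator re-derive exactly the same sample.
    return random.Random(seed).sample(denials, k)
```

A regulator or third-party auditor could, under these assumptions, require that every denial be logged in a form like `to_json()` and that a fixed share of denials be pulled for review with a published seed, so the sample itself can be checked.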

A better path: automation with the right guardrails

The future of our slow, opaque, and costly system shouldn’t be to burden doctors with more administrative tasks that pull them from patient care. Modern LLMs can handle the narrow, bureaucratic task of reviewing claims against coverage rules. By requiring AI to provide plain-language denial reasons, instantly accessible to doctors and patients, and enforcing continuous audits by regulators, we can ensure fairness and transparency. This lets doctors focus on care, patients get clear answers, and insurers cut costs — a win for all.

Build, don’t ban

This is no different from how we’ve approached autonomous vehicles. Few argue we should ban self-driving cars outright — because that would eliminate the chance to improve a technology with huge potential to save lives. Instead, we regulate and monitor them, creating safety benchmarks and oversight while allowing the technology to evolve. Insurance coverage decisions deserve the same approach: guardrails, not prohibition.

Who will be first with instant, contestable, transparent denials?

Banning AI-only denials feels humane, but it entrenches the worst features of the old system: delay, opacity, and cost. The real solution is not to put more doctors into the administrative loop, but to design AI systems that are fast, transparent, and auditable, and to guarantee that patients and doctors have the documentation and tools needed to appeal effectively, including with AI.

Patients don't need slower denials with a human rubber stamp. They need faster, clearer, and more contestable decisions — and for the first time, AI makes that achievable. The first insurer to deliver instant, contestable, transparent denials will win trust and market advantage — and regulators should be enabling that, not blocking it.